LinkedIn was doing an article on AI in VFX, but the comment field was small, and I sometimes ramble. So rather than making a more concise comment, I just posted it here.

The history of the “AI Treadmill” is always important to keep in mind when we get into a hype cycle.

In the 1950s, expression parsing was considered AI because humans could do it, but we hadn’t yet figured out how to make computers do it. Concepts like syntax trees and the ‘*’ notation for pattern matching come from linguistics research and were adapted for Computer Science by early AI researchers. Today, they underlie parsing for basically every kind of script and file format. Every little Python script or expression knob is using “AI,” according to an AI researcher who just woke up from a very long nap.

In the 1980s, computer vision was a hot topic in AI research. In the 1990s, traditional VFX was predictably upended by technology from AI research, again. Before tools like Boujou became available, manual frame-by-frame 3D tracking was a niche of its own. Then that niche was mostly gone, reduced to a small part of the VFX workload: a few frames here and there when the automated tracker glitched.

Today, Deep Learning technology from AI research is the thing predictably disrupting VFX, yet again, as has always been the case. If anything, we’ve been in an unusually stable period for the last decade or so, which makes us long overdue for a massive disruption that changes the way the work is done. Change is, as it ever was, coming for your job. Change was coming for your job last year. And change will be coming for your job next year. That part never changes. We’ve just grown so used to the past changes that they seem like they must always have been obvious, so we forget how disruptive each of them was in its own way over the years.

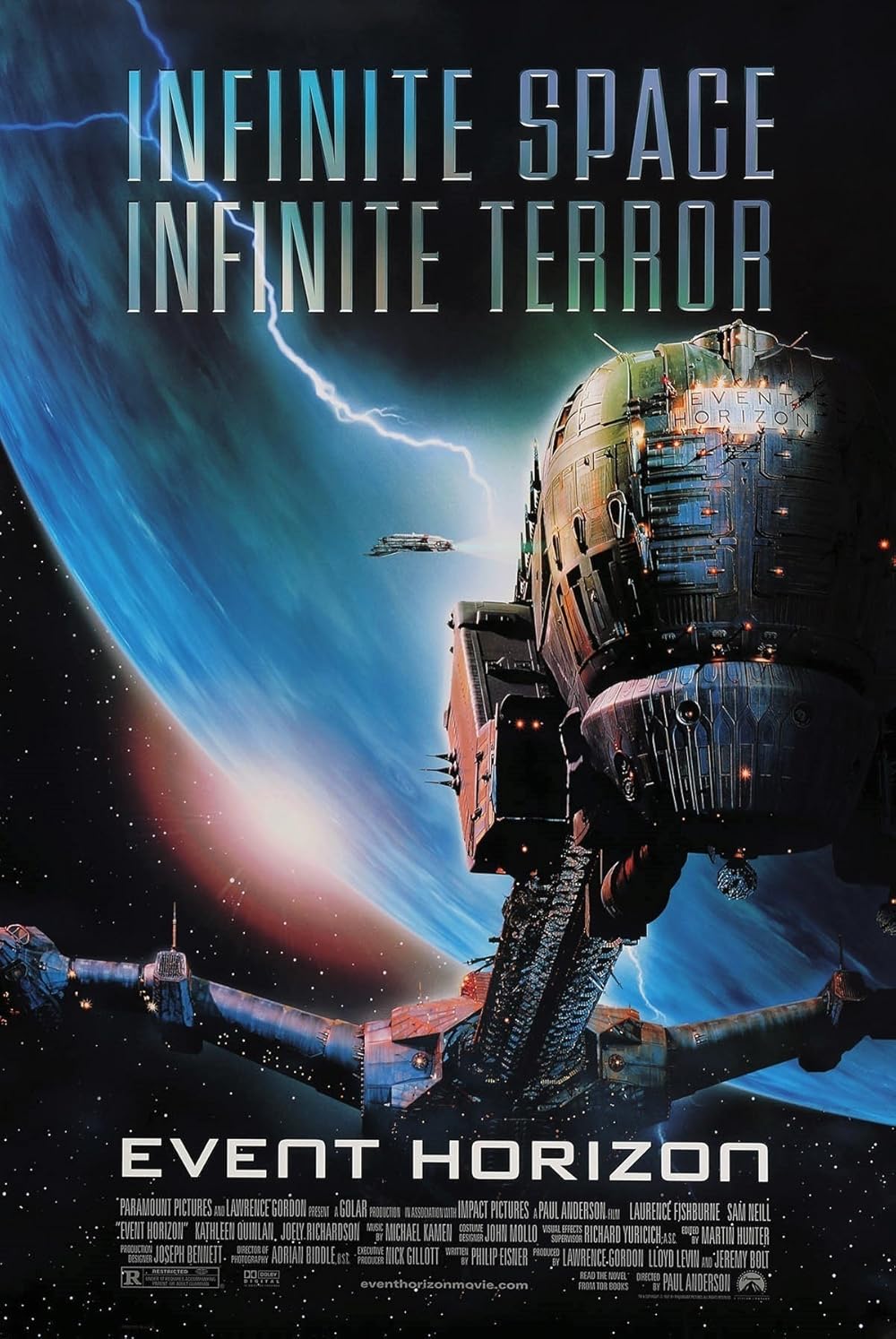

Maybe this time is different. Maybe this time we’ll build Skynet and Skynet will build Terminators, and this time it won’t just be happening on screen. But probably not.

Photogrammetry will make it easier to put real things in 3D spaces. And to put 3D things in images of real spaces. But it still takes humans to think up interesting things that we’ll actually care about seeing rendered in spectacular visual effects. That aspect is missed in a lot of research papers. New techniques are often so tied to using ground truth in training the models that they wind up focused on rendering stunningly realistic versions of things that I could already see without needing a 3D render. I have plenty of spoons in a drawer in my kitchen. I can see plenty of spoons at the local coffee shop. A paper on a faster way to render a more realistic spoon is interesting as far as it goes. But two hours of realistic spoons won’t win any Oscars. If it could, we could already have seen two hours of footage of actual spoons. Modern software makes it easier than ever before to make Bullet Time sequences. But it still takes a human to create a film like “The Matrix” that muses about the possibilities of the human condition that arise if you assume that there is no spoon.